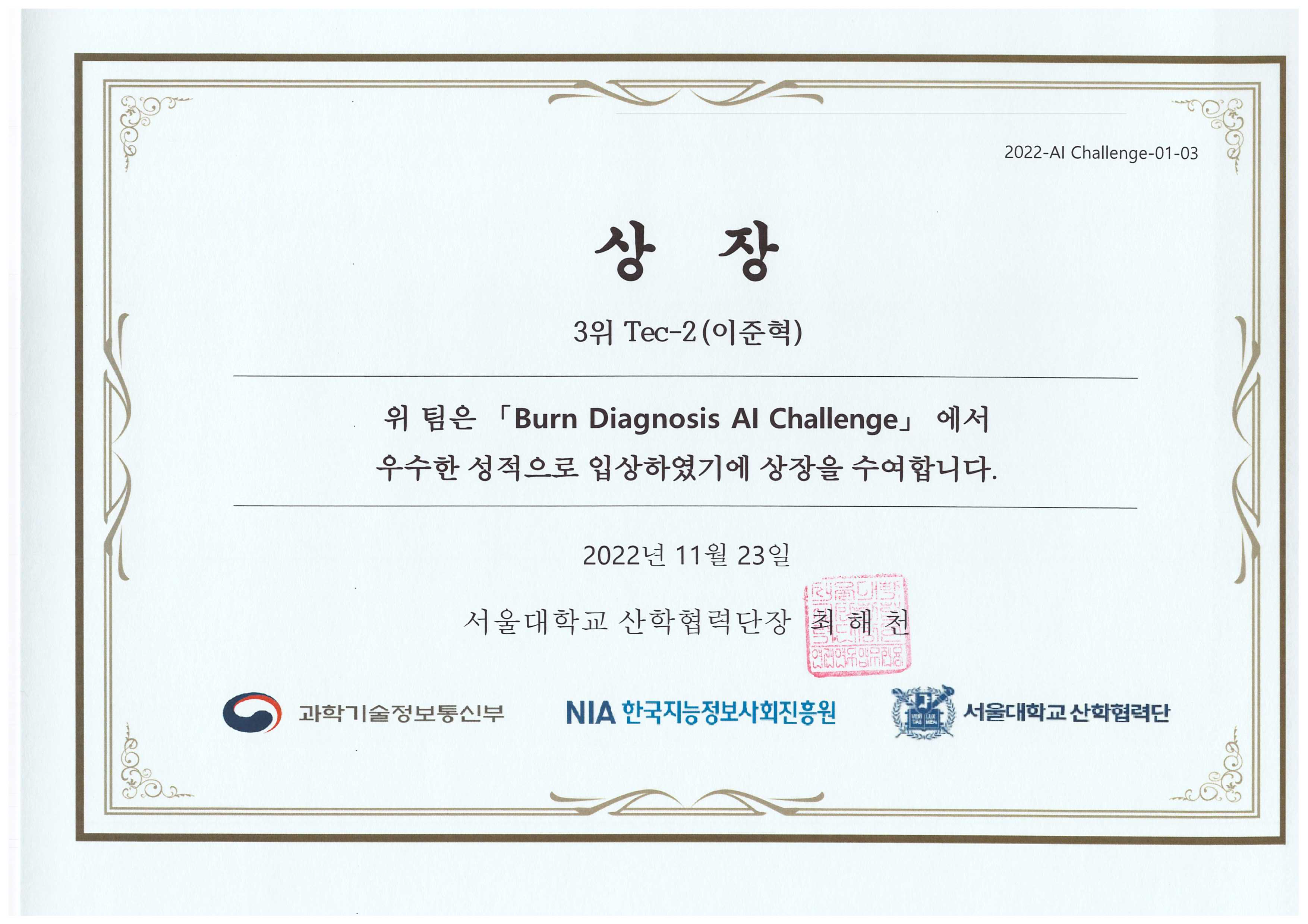

🏆 Burn Diagnosis AI Challenge

3rd Place Award Certificate - Seoul National University Hospital AI Challenge

Project Overview

We built a burn classification system to help emergency medical professionals make faster, more accurate treatment decisions. The project won 3rd place in the national AI challenge hosted by Seoul National University Hospital.

Detailed Introduction

Challenge context. Burn injuries are among the most devastating traumatic injuries, requiring immediate and accurate assessment for optimal patient outcomes. Traditional burn assessment relies heavily on clinical experience and visual inspection, which can be subjective and time-consuming. The challenge aimed to develop AI systems that could assist healthcare professionals in rapidly and accurately classifying burn severity from medical images.

Key project objectives

- Develop a computer vision model that localizes the burn region of interest and classifies the severity of the burn.

- Create a robust model that works across diverse patient populations and burn types.

- Ensure high accuracy in critical emergency medicine scenarios.

- Provide interpretable results to support clinical decision-making.

- Optimize for real-time performance in emergency settings.

Background: Burn Classification in Emergency Medicine

Burn injuries are classified by depth and extent, with different treatment protocols for each category. First-degree burns affect only the epidermis, second-degree burns extend into the dermis (superficially or deeply), third-degree burns destroy the full thickness of the skin, and fourth-degree burns reach underlying tissues such as muscle and bone. Accurate classification is crucial for determining treatment urgency, pain management, and the potential need for specialized care.

Technical approach

Our solution implemented a dual-model architecture combining object detection and classification:

- Data preprocessing: Image resizing to 256x256, normalization, and tensor conversion using PyTorch transforms.

- Regression model (Bounding Box Detection): ResNet34-based architecture with custom FC layer (512→4) for burn region localization, trained with Huber loss and evaluated by IoU.

- Classification model (Severity Classification): ResNet34 architecture with 5-class output for burn severity classification (0-4 stages).

- Training strategy: Adam optimizer with stepwise learning rate decay (5e-3 → 4e-3 → 3.2e-3 → 2.56e-3 → 2.048e-3) and progressive training over 6 cycles.

- Loss functions: HuberLoss for regression (delta=1.0) and CrossEntropyLoss for classification.

- Evaluation metrics: IoU (Intersection over Union) for bounding box accuracy and classification accuracy for severity prediction.
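The IoU metric and the Huber loss named above can be sketched in plain Python. This is a minimal illustration, not the project's code: it assumes boxes are `(x, y, w, h)` tuples normalized to [0, 1] with `(x, y)` as the top-left corner (the corner convention is an assumption), and the function names `iou` and `huber` are hypothetical. The Huber formula follows the standard definition with `delta=1.0` and mean reduction.

```python
def xywh_to_corners(box):
    """Convert (x, y, w, h) to (x1, y1, x2, y2); (x, y) is assumed top-left."""
    x, y, w, h = box
    return x, y, x + w, y + h


def iou(box_a, box_b):
    """Intersection over Union of two (x, y, w, h) boxes."""
    ax1, ay1, ax2, ay2 = xywh_to_corners(box_a)
    bx1, by1, bx2, by2 = xywh_to_corners(box_b)
    # Overlap extent along each axis, clamped at zero when boxes are disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = box_a[2] * box_a[3] + box_b[2] * box_b[3] - inter
    return inter / union if union > 0 else 0.0


def huber(pred, target, delta=1.0):
    """Mean Huber loss: quadratic for small errors, linear for large ones."""
    total = 0.0
    for p, t in zip(pred, target):
        err = abs(p - t)
        if err <= delta:
            total += 0.5 * err ** 2
        else:
            total += delta * (err - 0.5 * delta)
    return total / len(pred)


if __name__ == "__main__":
    predicted = (0.25, 0.0, 0.5, 0.5)
    truth = (0.0, 0.0, 0.5, 0.5)
    print("IoU:", iou(predicted, truth))
    print("Huber:", huber(predicted, truth))
```

Because the box coordinates are normalized to [0, 1], per-coordinate errors stay below `delta=1.0`, so under these assumptions the Huber loss operates in its quadratic regime for in-range predictions.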

Dataset & validation

- Dataset structure: Organized into Training/Validation/Test splits with JPG images and corresponding JSON annotation files containing bounding box coordinates and severity labels.

- Data format: Custom BurnDataset class handling image loading, JSON parsing for bbox coordinates (x, y, w, h) normalized to [0,1] range, and 5-class severity labels (0-4).

- Data loading: DataLoader with batch_size=32 for training, batch_size=1 for validation/testing, 24 workers for parallel processing, and pin_memory for GPU optimization.

- Validation strategy: Real-time validation every 32 steps during training with IoU calculation for regression and accuracy metrics for classification.

- Test evaluation: Comprehensive testing with inference time measurement, per-class performance analysis, and visualization of bounding box predictions.
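As a rough sketch of the annotation handling described above, the snippet below parses one JSON record and normalizes its bounding box to the [0, 1] range. The annotation schema is not given in this write-up, so the key names (`image_width`, `image_height`, `bbox`, `severity`) and the assumption that the raw box is stored in pixels are hypothetical.

```python
import json


def parse_annotation(json_text):
    """Return ((x, y, w, h) normalized to [0, 1], severity label 0-4).

    The key names used here are illustrative assumptions, not the
    challenge's actual annotation schema.
    """
    record = json.loads(json_text)
    img_w = record["image_width"]
    img_h = record["image_height"]
    x, y, w, h = record["bbox"]  # assumed to be pixel coordinates
    bbox = (x / img_w, y / img_h, w / img_w, h / img_h)
    label = int(record["severity"])
    if not 0 <= label <= 4:
        raise ValueError(f"severity label out of range: {label}")
    return bbox, label


if __name__ == "__main__":
    sample = (
        '{"image_width": 512, "image_height": 256, '
        '"bbox": [128, 64, 256, 128], "severity": 2}'
    )
    print(parse_annotation(sample))
```

A dataset class such as the BurnDataset mentioned above would typically call a helper like this from its item-loading method, alongside reading and transforming the JPG image.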

Model development strategy

Our development process implemented a systematic dual-model approach:

- Regression model development: ResNet34 backbone with custom FC layer for bounding box regression, trained using HuberLoss with stepwise learning rate decay over 6 training cycles (512 iterations each).

- Classification model development: Separate ResNet34 architecture for 5-class severity classification, trained with CrossEntropyLoss and StepLR scheduler (gamma=0.75, step_size=5).

- Training optimization: Progressive learning rate reduction (5e-3 → 4e-3 → 3.2e-3 → 2.56e-3 → 2.048e-3) with model checkpointing based on validation IoU scores.

- Evaluation framework: Real-time validation every 32 steps with IoU calculation for regression accuracy and classification accuracy metrics, with comprehensive test evaluation including inference time analysis.

- Model integration: Combined inference pipeline with regression model for burn region detection followed by classification model for severity assessment.
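The two learning rate schedules above can be reproduced with a few lines of arithmetic. In this sketch, the per-cycle factor of 0.8 for the regression model is inferred from the listed values (each is 0.8 times the previous one) rather than stated in the write-up, and the function names are hypothetical. The second function mirrors the step-decay rule used by a StepLR-style scheduler with `gamma=0.75` and `step_size=5`; the classification model's starting learning rate is not given, so it is left as a required argument.

```python
def regression_lr(cycle, base_lr=5e-3, factor=0.8):
    """Learning rate for a given training cycle (0-indexed).

    factor=0.8 is inferred from the listed schedule, not stated in the source.
    """
    return base_lr * factor ** cycle


def step_lr(epoch, base_lr, gamma=0.75, step_size=5):
    """Step decay: multiply the rate by gamma once every step_size epochs."""
    return base_lr * gamma ** (epoch // step_size)


if __name__ == "__main__":
    for cycle in range(5):
        print(f"cycle {cycle}: lr = {regression_lr(cycle):.6f}")
    # base_lr=1e-3 below is only a placeholder for illustration.
    for epoch in (0, 4, 5, 10):
        print(f"epoch {epoch}: lr = {step_lr(epoch, base_lr=1e-3):.6f}")
```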

Performance & results

Our system performed strongly across most burn severity classes, with the 4th class scoring lowest on mAP:

- Overall performance: mAP@IoU(0.5) of 0.89, IoU of 0.76, with 0.76 precision and 0.76 recall.

- Class-specific performance:

  - 1st Class: mAP@IoU(0.5) 0.86, IoU 0.68

  - 2nd Class: mAP@IoU(0.5) 0.95, IoU 0.73, precision and recall of 1.0

  - 2nd-Deep Class: mAP@IoU(0.5) 0.96, IoU 0.80

  - 3rd Class: mAP@IoU(0.5) 0.98, IoU 0.82, precision and recall of 1.0

  - 4th Class: mAP@IoU(0.5) 0.71, IoU 0.80

- Inference speed: Average inference time of 0.0188 seconds (18.8 ms) per image.

- Real-time capability: Consistent sub-20ms inference across all severity classes, enabling real-time clinical decision support.
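A per-image inference time like the one reported above is usually obtained by timing a loop over single-image inputs and dividing by the image count. The sketch below shows one way to do that with the standard library; `mean_inference_seconds` and the stand-in `predict` callable are hypothetical and are not the project's benchmarking code.

```python
import time


def mean_inference_seconds(predict, inputs, warmup=2):
    """Average wall-clock seconds per call of predict over inputs."""
    # Warm-up calls keep one-time setup cost out of the measurement.
    for item in inputs[:warmup]:
        predict(item)
    start = time.perf_counter()
    for item in inputs:
        predict(item)
    elapsed = time.perf_counter() - start
    return elapsed / len(inputs)


if __name__ == "__main__":
    # Stand-in for a real model call; replace with the actual inference function.
    def fake_predict(x):
        return x * 2

    seconds = mean_inference_seconds(fake_predict, list(range(1000)))
    print(f"{seconds * 1000:.4f} ms per item")
```

When the model runs on a GPU, the device generally needs to be synchronized before reading the clock, since kernels are launched asynchronously; otherwise the measured time can understate the real latency.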

Team & roles

Core team

- BS June Lee — Project Lead, Computer Vision & Model Development

- BS Woo-Jin Jeong — Data Engineering & Preprocessing

- PhD Jeong-Hwa Kang — Validation & Evaluation

Clinical partners

- Department of Plastic and Reconstructive Surgery, SNUH

- Emergency Medicine specialists

- Clinical informatics team

- Medical imaging experts

Technical implementation

- Image processing: Advanced preprocessing pipeline for medical image enhancement.

- Model architecture: Custom CNN with attention mechanisms for burn region focus.

- Training strategy: Multi-stage training with progressive complexity increase.

- Inference optimization: Model quantization and optimization for real-time deployment.

Clinical impact

Challenges & solutions

- Image variability: Robust preprocessing and data augmentation techniques.

- Clinical accuracy: Extensive validation with expert clinicians.

- Real-time performance: Model optimization and efficient inference pipelines.

- Generalization: Diverse training data and cross-institutional validation.

Future directions

- Integration with electronic health records (EHR) systems.

- Mobile application for point-of-care burn assessment.

- Expansion to other types of traumatic injuries.

- Multi-modal analysis combining images with patient history.

Recognition & impact

This project received 3rd place in the national AI challenge, demonstrating the potential of AI in emergency medicine. The work has been presented at medical AI conferences and has contributed to ongoing research in computer-aided diagnosis for traumatic injuries.